For those of you that don’t already know about Sonar you are missing an important tool in your quality assessment arsenal. Sonar is an open source tool that is a foundational platform to manage your software’s quality. The image below shows one of the main dashboard views that teams can use to get insights into their software’s health.

The dashboard provides rollup metrics out of the box for:

- Duplication (probably the biggest Design Debt in many software projects)

- Code coverage (amount of code touched by automated unit tests)

- Rules compliance (identifies potential issues in the code such as security concerns)

- Code complexity (an indicator of how easy the software will adapt to meet new needs)

- Size of codebase (lines of code [LOC])

Before going into how to use these metrics to assess whether to promote builds to downstream environments, I want to preface the conversation with the following note:

Code analysis metrics should NOT be used to assess teams and are most useful when considering how they trend over time

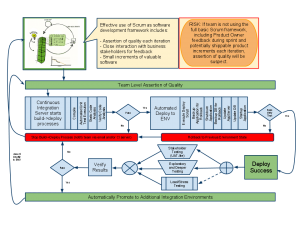

Now that we have this important note out of the way and, of course, nobody will ever use these metrics for “evil”, lets discuss pulling data from Sonar to automate assessments of builds for promotion to downstream environments. For those that are unfamiliar with automated promotion, here is a simple, happy example:

A development team makes some changes to the automated tests and implementation code on an application and checks their changes into source control. A continuous integration server finds out that source control artifacts have changed since the last time it ran a build cycle and updates its local artifacts to incorporate the most recent changes. The continuous integration server then runs the build by compiling, executing automated tests, running Sonar code analysis, and deploying the successful deployment artifact to a waiting environment usually called something like “DEV”. Once deployed, a set of automated acceptance tests are executed against the DEV environment to validate that basic aspects of the application are still working from a user perspective. Sometime after all of the acceptance tests pass successfully (this could be twice a day or some other timeline that works for those using downstream environments), the continuous integration server promotes the build from the DEV environment to a TEST environment. Once deployed, the application might be running alongside other dependent or sibling applications and integration tests are run to ensure successful deployment. There could be more downstream environments such as PERF (performance), STAGING, and finally PROD (production).

The tendency for many development teams and organizations is that if the tests pass then it is good enough to move into downstream environments. This is definitely an enormous improvement over extensive manual testing and stabilization periods on traditional projects. An issue that I have still seen is the slow introduction of software debt as an application is developed. Highly disciplined technical practices such as Test-Driven Design (TDD) and Pair Programming can help stave off extreme software debt but these practices are still not common place amongst software development organizations. This is not usually due to lack of clarity about these practices, excessive schedule pressure, legacy code, and the initial hurdle to learning how to do these practices effectively. In the meantime, we need a way to assess the health of our software applications beyond just tests passing and in the internals of the code and tests themselves. Sonar can be easily added into your infrastructure to provide insights into the health of your code but we can go even beyond that.

The Sonar Web Services API is quite simple to work with. The easiest way to pull information from Sonar is to call a URL:

http://nemo.sonarsource.org/api/resources?resource=248390&metrics=technical_debt_ratio

This will return an XML response like the following:

<resources>

<resource>

<id>248390</id>

<key>com.adobe:as3corelib</key>

<name>AS3 Core Lib</name>

<lname>AS3 Core Lib</lname>

<scope>PRJ</scope>

<qualifier>TRK</qualifier>

<lang>flex</lang>

<version>1.0</version>

<date>2010-09-19T01:55:06+0000</date>

<msr>

<key>technical_debt_ratio</key>

<val>12.4</val>

<frmt_val>12.4%</frmt_val>

</msr>

</resource>

</resources>

Within this XML, there is a section called <msr> that includes the value of the metric we requested in the URL, “technical_debt_ratio”. The ratio of technical debt in this Flex codebase is 12.4%. Now with this information we can look for increases over time to identify technical debt earlier in the software development cycle. So, if the ratio to increase beyond 13% after being at 12.4% 1 month earlier, this could tell us that there is some technical issues creeping into the application.

Another way that the Sonar API can be used is from a programming language such as Java. The following Java code will pull the same information through the Java API client:

Sonar sonar = Sonar.create("http://nemo.sonarsource.org");

Resource commons = sonar.find(ResourceQuery.createForMetrics("248390",

"technical_debt_ratio"));

System.out.println("Technical Debt Ratio: " +

commons.getMeasure("technical_debt_ratio").getFormattedValue());

This will print “Technical Debt Ratio: 12.4%” to the console from a Java application. Once we are able to capture these metrics we could save them as data to trend in our automated promotion scripts that deploy builds in downstream environments. Some guidelines we have used in the past for these types of metrics are:

- Small changes in a metric’s trend does not constitute immediate action

- No more than 3 metrics should be trended (the typical 3 I watch for Java projects are duplication, class complexity, and technical debt)

- The development should decide what are reasonable guidelines for indicating problems in the trends (such as technical debt +/- .5%)

In the automated deployment scripts, these trends can be used to stop deployment of the next build that passed all of its tests and emails can be sent to the development team regarding the metric culprit. From there, teams are able to enter the Sonar dashboard and drill down into the metric to see where the software debt is creeping in. Also, a source control diff can be produced to go into the email showing what files were changed between the successful builds that made the trend go haywire. This might be a listing per build and the metric variations for each.

This is a deep topic that this post just barely introduces. If your organization has a separate configuration management or operations group that managed environment promotions beyond the development environment, Sonar and the web services API can help further automate early identification of software debt in your applications before they pollute downstream environments.